All-NVMe SSD Array in Unraid

This is a very simple examination/case study of what may happen if you use all NVMe drives in an Unraid array. Mmmm, data is beautiful. There is a lot that needs to be stated before jumping into the charts and results, so let’s get moving!

Hardware

The Receiving Unraid Server

These are terrible NVMe drives, but considering I found them for 50 buckaroos, it’s hard to complain. To help with the test, I used an Asus M.2 expansion card, which allows me to put 4 NVMe drives into my server.

Each drive runs at PCIe x4 speeds, because the x16 slot is bifurcated (a fancy word for divided) into x4/x4/x4/x4 lanes.

The only other noteworthy hardware here is the Intel 10GbE PCIe cards: an X540-T2 and an X520-T2.

The Transmitting Server

A Ryzen-based server with a standard Unraid configuration: SATA disks and an NVMe cache. You can read about it here if you want. Just know it has changed a lot since the time of writing.

Topology

Unraid-to-Unraid file transfer over 10Gb networking between the two hosts. Both servers are running Unraid version 6.8.3.

Unraid MTU/Jumbo Frames

Both the Sender and Receiver have their MTU set to 9000.

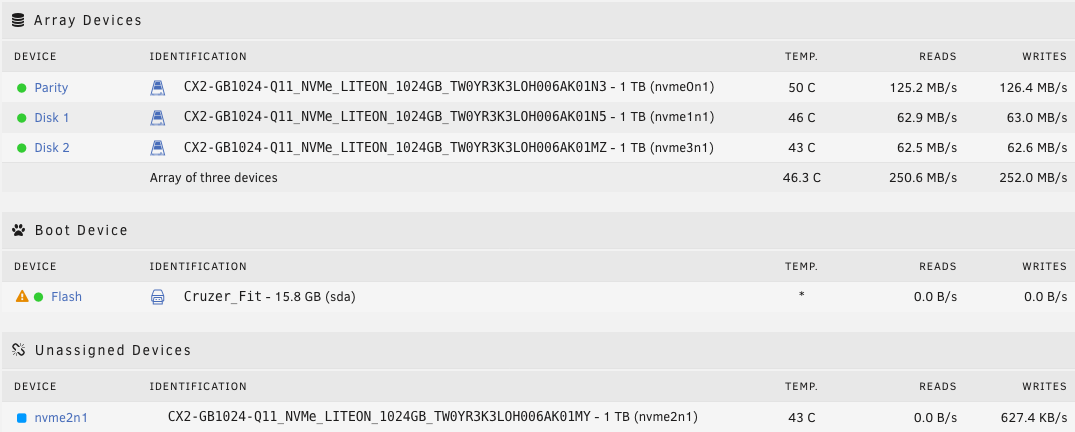
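A saved MTU setting does not guarantee that 9000-byte frames actually make it from one server to the other. One common check is a ping with fragmentation forbidden and a payload sized to fill the whole frame. The sketch below assumes the Linux iputils ping (`-M do` forbids fragmentation, `-s` sets the payload size, `-c` the count) and uses the receiver address from the transfer script. The function names are hypothetical.

```bash
# Largest ICMP payload that fits in one frame of the given MTU:
# MTU minus 20 bytes of IPv4 header and 8 bytes of ICMP header.
icmp_payload() {
    local mtu=${1:-9000}
    echo $((mtu - 28))
}

# Hypothetical helper: send 3 pings that exactly fill the MTU and may not be
# fragmented. If jumbo frames are not working along the whole path, these
# fail (an error or no replies) instead of being silently split up.
check_jumbo() {
    local host=${1:-172.16.1.10}   # receiving server, as used in the transfer script
    local mtu=${2:-9000}
    ping -c 3 -M do -s "$(icmp_payload "$mtu")" "$host"
}
```

On the sender, `check_jumbo 172.16.1.10 9000` pings with an 8972-byte payload.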

The Array

For this hardware setup I am only using 1TB drives as follows:

- 1TB parity
- 1TB data disk
- 1TB data disk
- No cache drives

As you probably noticed, there are 5 available drives but only 3 in use. You may also be wondering why there are only 3 NVMe drives in the array when earlier I said 4. Well, 1 drive started to fail miserably during the test and had to be dropped from the array.

Test Setup

Definitions

trim - a command, typically issued by the operating system, that tells the SSD which blocks of its storage no longer hold data in use, so the drive can erase them in the background and have clean space ready for new data

Run - a single instance of the script transferring data 40 or more times from the remote host to the test host

Procedure

1. Transfer data
2. Record transfer speed
3. Record time to completion of each transfer
4. Parity Check
5. Delete all data from the array
6. Repeat

Steps 1-3. Transfer Data and Record Points of Interest

For these steps, I installed the User Scripts plugin so that I could run a script repeatedly as a background process, so it would not interfere with my use of my primary server. The script simply copies a 48GB file about 40 times, filling the array, and appends the results to a CSV file that we used to gather data points. Here is the script.

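```bash
#!/bin/bash
# Copy the same 48GB test file to the receiving server over and over and
# append scp's progress output (speed and elapsed time) to a CSV file.
# Note: /tmp on Unraid lives in RAM, so this file is lost on a crash or reboot.
results=/tmp/results12.csv
src=/mnt/user/Videos/Completed/2020/oeveoCableTrayFinal.mov

i=0
while [ "$i" -lt 41 ]   # i = 0..40, so 41 copies per run
do
    # `script` runs scp under a pseudo-terminal so scp prints its progress meter;
    # -q hides script's own banner, /dev/null discards the ./typescript copy.
    script -q -c "echo run-$i; scp -r $src 172.16.1.10:/mnt/user/downloads/$i-oeveoCableTrayFinal.mov" /dev/null >> "$results"
    i=$((i + 1))         # replaces the deprecated $[...] arithmetic
    sleep 10             # short pause between transfers
done
```

Sample Output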

Below is a screenshot of the imported CSV file that is created by the script. It is totally unaltered in this picture.
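The script captures scp’s raw progress meter, so each transfer in the CSV appears as a series of progress updates separated by carriage returns. The sketch below pulls out the rate from every update that reached 100% and averages those rates. It assumes the usual scp meter layout (`<file> 100% <size> <rate>MB/s <time>`) and that every rate is reported in MB/s. The function name is hypothetical.

```bash
# Hypothetical helper: average the rate of every finished transfer.
# Reads the captured scp output on stdin and prints the mean in MB/s.
avg_speed() {
    tr '\r' '\n' |        # put each progress update on its own line
    awk '
        / 100% / {        # only updates for finished transfers
            for (i = 1; i <= NF; i++)
                if ($i ~ /MB\/s$/) {    # a rate field such as 136.2MB/s
                    sub(/MB\/s$/, "", $i)
                    sum += $i
                    n++
                }
        }
        END { if (n) printf "%.1f\n", sum / n }
    '
}
```

Usage: `avg_speed < /tmp/results12.csv`. A duplicated 100% line is counted every time it appears.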

Step 4. Parity Check

I decided that we should kick off a Parity Check after the completion of each run because, in theory, we would be negatively affected by the lack of trim support for drives in the array, and at some point we should have been able to observe poor read/write performance. Conducting a Parity Check would hopefully show us errors; it never did.

Step 5. Deletion

We deleted every single 48GB video file because, well, we needed to run the tests multiple times in hopes of seeing how the lack of trim support for array devices negatively affects write performance.
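The deletion can be scripted as well. This sketch assumes the copies land in /mnt/user/downloads with the `<n>-oeveoCableTrayFinal.mov` names the transfer script gives them, and that it runs on the receiving server. The function name is hypothetical.

```bash
# Hypothetical helper: remove every numbered test copy from the receiving share.
# Only files matching "<n>-oeveoCableTrayFinal.mov" are touched.
cleanup_run() {
    local dir=${1:-/mnt/user/downloads}
    rm -f -- "$dir"/*-oeveoCableTrayFinal.mov
}
```

Calling `cleanup_run` with no argument clears the default share. If nothing matches, `rm -f` simply does nothing.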

Step 6. Repeat

Repeat the previous steps until bored or until we finally see something.

Test Results

For now, we will just say the results are inconclusive, but we will talk more about that later. You should know that I gave up after a certain point because I wasn’t seeing any performance loss after transferring roughly 42TB of “data” from server to server. Below is a sample of my final 12 runs before calling it quits. We will talk about why only 12 results are displayed later.
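As a rough sanity check on that total, assume all 22 runs copied the 48GB file 40 times each. The 22 runs are the 12 shown here plus the 10 lost runs described under Issue 1.

$$48\ \text{GB} \times 40\ \tfrac{\text{copies}}{\text{run}} \times 22\ \text{runs} = 42{,}240\ \text{GB} \approx 42\ \text{TB}$$

Runs that used 41 or 50 copies push the real figure somewhat higher.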

Average Transfer Speed

Average Time to Complete Transfer (minutes:seconds)

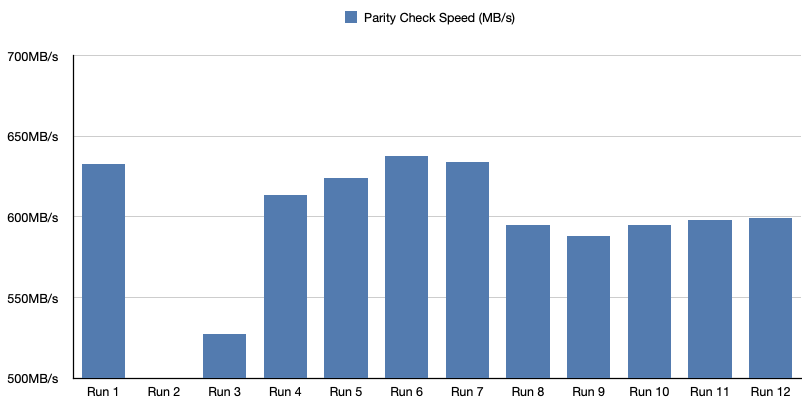

Average Parity Check Speed

Run 2 did not finish due to drive failure

Average Duration of Parity Check

Run 2 did not finish due to drive failure

Test Sources

Dropping a link here for anyone who is curious and wants access to the CSV files containing all the data, so they can take their own look.

Issues During Experimentation

Issue 1

I ran into a few self-inflicted issues and one unexpected issue. The first one is by far the worst and was a huge blow to my motivation to continue testing. Each exported results#.csv was saved in the /tmp directory. Well, if you don’t know, /tmp is a directory in RAM. So, after a system crash, I lost the first 10 runs. That’s right. This would have been a 22-run beast if I hadn’t lost the results. I did manage to save runs 11 and 12, and I renamed them to run 1 and run 2 as my way of starting over. If you open one of the CSV files, you will actually see that I started with 50 transfers per run. Gods, it would have been so cool. All in, I transferred about 42TB++ of data between the two servers.

Issue 2

The second failure happened in Run 2 (actually run 12). One of the NVMe drives spit out errors like mad during a parity check, and my “share” disappeared. It turns out I may have killed one of the drives. I tried to continue testing with the bad drive, but it kept spitting out errors during each run and, well, ain’t nobody got time for that. So I removed it from the array and stepped down to 3 drives instead of 4 for all the remaining tests.

Issue 3

In Run 9, the data transmitter (remote server) started a Parity Check near the tail end of the final few transfers. I discarded those results from the data that is displayed.

Issue 4

In a couple of the CSV files, there are duplicate transfer speeds. Instead of taking the time to delete the duplicate data points, I figured that, given the size of the data set, those duplicates would have a near-insignificant impact on the overall test results. If you feel slighted by my decision, feel free to parse through the data and make the necessary adjustments.

A Second Look

So, in the interest of making sure trim wasn’t the issue, I stopped the array, changed the SSDs to cache drives in a RAID10 setup, and then manually executed the trim command.

Something happened after executing the command. Once it completed, I set a new config again and moved the drives back into the array, again with no cache drives.

Then I once again kicked off the scripts as soon as the array was set back up, and there was no change in the results immediately following the changes.
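The post doesn’t say exactly which trim command was run for the manual trim in this second look. On Linux, the usual manual method is util-linux’s fstrim. It asks the filesystem mounted at a path to discard all of its unused blocks, and `-v` reports how much was trimmed. This sketch assumes the SSDs are mounted as the Unraid cache at /mnt/cache and that it runs as root. The wrapper name is hypothetical.

```bash
# Hypothetical wrapper: trim all unused blocks on the filesystem mounted at
# the given path (defaults to the Unraid cache pool) and report the amount.
trim_pool() {
    local mnt=${1:-/mnt/cache}
    fstrim -v "$mnt"
}
```

Usage: `trim_pool` or `trim_pool /mnt/cache`. The command only works on a mounted filesystem whose underlying device supports discard.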

A Third Look

Because something seemed off with the throughput, I decided to swap the receiver’s Intel X520-T2 for an Aquantia AQtion AQC107 card. At first there were peaks of 167MB/s, which I had seen before, but then the speed would slowly fall to 133~136MB/s. Yes, even on the third look, the MTU on both servers was still set to 9000. Right off the bat, the new card didn’t seem to make a significant difference.
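These speeds can be compared with a 10Gb link by converting bytes to bits (1 byte = 8 bits) and taking MB as 10^6 bytes:

$$136\ \text{MB/s} \times 8 = 1088\ \text{Mb/s} \approx 1.09\ \text{Gb/s} \approx 11\%\ \text{of}\ 10\ \text{Gb/s}$$

$$167\ \text{MB/s} \times 8 = 1336\ \text{Mb/s} \approx 1.34\ \text{Gb/s} \approx 13\%\ \text{of}\ 10\ \text{Gb/s}$$

If scp’s MB actually means 2^20 bytes, these figures rise by about 5%. Either way, even the peaks use only a small fraction of the link. That suggests the limit was somewhere other than the link’s raw bandwidth.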

Questions

Why did you do this?

I was very curious and it was really bothering me. 90% of the things I read basically just alluded to “this is a bad idea, don’t do it.” The most detailed explanations I could find were still not very helpful. Things like: Unraid does not support trim in the array, and because of that, SSDs won’t know which blocks of data can be erased because they are no longer needed. Okay, but when will I see the loss of performance? How much performance loss should I expect? What happens if the SSD is filled to the brim, does it just stop working? Nobody seems to have answers to these questions.

Why is the performance so consistent?

I’m actually not sure why things worked as well as they did. I was expecting incredibly slow writes on each successive test. As soon as all the data was deleted from the array, I started the next run. I should have seen something wrong, right?

When will I see the loss of performance?

I too still want an answer to this question and have yet to find or understand one. It seems to depend heavily on the SSD manufacturer and the architecture of the SSD in question. I think Lite-On may have done something correctly here.

How much performance loss should I expect?

Unfortunately, this is something I was unable to answer, and it seems to vary a lot from brand to brand. I would need more SSDs to really test this one all the way through. But compared to my old setup, which got a burst speed of 112MB/s until the cache filled and then dropped to 54~70MB/s, an all-SSD array seems to do 100+MB/s consistently. From my experience, though, it’s still much slower than using NVMe cache drives, where you can hit 1GB/s. I’ve done it and it’s amazing.

Is there a better way?

God yes. If performance is your goal, you should be using NVMe drives or SSDs in RAID 0 or RAID 10 as cache. That will give you the most performance and give you operating system support for trim.

Why is trim so important?

The short answer, according to Wikipedia, is that trim allows for background deletion of data blocks and reduces wear on the SSD. How does it reduce wear on the SSD? I’m not really sure. But the background cleanup means that when the SSD is idle, it can erase the data the OS has told it is no longer needed, and basically always have blocks available for writing.

Do I need an all SSD array?

Well, I can’t truly answer that, but again, if you are looking for great performance, RAID 10 the cache drives and boom, you are good to go. If you are looking for a high-speed storage server where there are like 10 or more people using the dang thing at the same time... well, my friend, Unraid probably isn’t what you are looking for.

What happens if you do the transfer from the opposite direction?

Well, I didn’t test it originally, because all of this really only affects write speeds, not read speeds, so I didn’t see the relevance. But to answer your question anyway, I got 156.2MB/s.

How do I know if my SSD’s support Trim?

In Unraid, run the command “lsblk -D”. If the DISC-MAX column shows 0B next to the drive in question, that drive does not support trim. Here is an example. The NVMe drives in question here do in fact have trim/discard support, because they show 2T in the DISC-MAX column.
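To check every drive at once, you can ask lsblk for only the name and DISC-MAX columns and then filter for 0B. This sketch assumes util-linux lsblk: `-d` skips partitions, `-n` drops the header, and `-o` picks the columns. The helper name is hypothetical.

```bash
# Hypothetical helper: read "NAME DISC-MAX" pairs on stdin and print the
# devices whose DISC-MAX is 0B, i.e. drives that do not accept trim/discard.
no_trim_devices() {
    awk '$2 == "0B" { print $1 }'
}

# Usage on the server:
#   lsblk -d -n -o NAME,DISC-MAX | no_trim_devices
```

No output means every listed drive reports some discard support.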

Does the Unraid Dashboard show 10000Mbps?

This screenshot only shows one of the servers. Since the post was originally written, PNAS has been modified for a separate test and its hardware has changed, so I can only show you a screenshot of Transcencia, the source/sender in this test. Transcencia’s configuration has not changed since this test.

Conclusion

In the end, we learned that yes, you can run an all-SSD array if you so choose. The performance is pretty okay, judging from my scenario. However, if our goal is performance, this setup doesn’t make any sense. We would be better served by putting our SSDs in RAID0 or RAID10 as cache and seeing some more-than-stellar results. Using the drives as cache also lets the OS use the trim command and get those unneeded blocks cleared out.

I think I’ve managed to raise more questions and answer none of them. I do, however, feel like I got somewhat satisfactory results, in the sense that I no longer wish to explore the what-ifs. Well, maybe slightly. I would like to try a different brand and some higher-performance SSDs, just to see what could be left on the table.